Go Study Notes (11): Writing High-Performance Go Programs

2020-05-13 13:11:55

Don't Let Performance Get "Locked" Up

Let's look at a piece of code:

import (
	"fmt"
	"sync"
	"testing" // used by the benchmarks that follow
)

var cache map[string]string

const NUM_OF_READER int = 40

const READ_TIMES = 100000

func init() {
	cache = make(map[string]string)
	cache["a"] = "aa"
	cache["b"] = "bb"
}

// lockFreeAccess reads the shared map from many goroutines without a lock.
// This is safe only because no goroutine writes to the map.
func lockFreeAccess() {
	var wg sync.WaitGroup
	wg.Add(NUM_OF_READER)
	for i := 0; i < NUM_OF_READER; i++ {
		go func() {
			for j := 0; j < READ_TIMES; j++ {
				_, ok := cache["a"]
				if !ok {
					fmt.Println("Nothing")
				}
			}
			wg.Done()
		}()
	}
	wg.Wait()
}

// lockAccess performs the same reads, but takes a read lock around each one.
func lockAccess() {
	var wg sync.WaitGroup
	wg.Add(NUM_OF_READER)
	m := new(sync.RWMutex)
	for i := 0; i < NUM_OF_READER; i++ {
		go func() {
			for j := 0; j < READ_TIMES; j++ {
				m.RLock()
				_, ok := cache["a"]
				if !ok {
					fmt.Println("Nothing")
				}
				m.RUnlock()
			}
			wg.Done()
		}()
	}
	wg.Wait()
}

One of these functions reads without a lock and the other reads with a lock. Let's look at the benchmark results:

func BenchmarkLockFree(b *testing.B) {
	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		lockFreeAccess()
	}
}

// 169 6618595 ns/op 77 B/op 1 allocs/op

func BenchmarkLock(b *testing.B) {
	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		lockAccess()
	}
}

// 8 160448100 ns/op 10033 B/op 27 allocs/op

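From the results, LockFree is roughly 24 times faster than Lock, which is more than one order of magnitude (6,618,595 ns/op versus 160,448,100 ns/op). The example uses sync.RWMutex because Go's built-in map is not safe for concurrent use: as soon as any goroutine writes to the map, access has to be protected by a lock. Locking a map has a large performance cost, so Go also provides sync.Map, a map that is safe for concurrent use by multiple goroutines.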

sync.Map

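- Suited to read-heavy, write-light workloads in which the set of keys is relatively stable

- Trades space for time and maps values indirectly through pointers, so it uses more memory than the built-in map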

https://my.oschina.net/qiangmzsx/blog/1827059

Concurrent Map

- Suited to workloads in which both reads and writes are frequent

https://github.com/easierway/concurrent_map
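To give an idea of why a partitioned map can cope with frequent writes, here is a small sketch of the general technique: keys are hashed to one of several partitions, and each partition has its own lock, so writers that hit different partitions do not block each other. This is an assumption-laden illustration, not the implementation used by the concurrent_map library; the names `ShardedMap`, `NewShardedMap` and `pick` are hypothetical.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sync"
)

// shard is one partition: a plain map guarded by its own lock.
type shard struct {
	mu sync.RWMutex
	m  map[string]interface{}
}

// ShardedMap is a hypothetical partitioned map, for illustration only.
type ShardedMap struct {
	shards []*shard
}

// NewShardedMap creates a map with numOfPartitions shards (must be > 0).
func NewShardedMap(numOfPartitions int) *ShardedMap {
	sm := &ShardedMap{shards: make([]*shard, numOfPartitions)}
	for i := range sm.shards {
		sm.shards[i] = &shard{m: make(map[string]interface{})}
	}
	return sm
}

// pick hashes the key with FNV-1a to choose a partition.
func (sm *ShardedMap) pick(key string) *shard {
	h := fnv.New32a()
	h.Write([]byte(key))
	return sm.shards[h.Sum32()%uint32(len(sm.shards))]
}

// Set locks only the partition that owns the key.
func (sm *ShardedMap) Set(key string, val interface{}) {
	s := sm.pick(key)
	s.mu.Lock()
	s.m[key] = val
	s.mu.Unlock()
}

// Get takes a read lock on the owning partition only.
func (sm *ShardedMap) Get(key string) (interface{}, bool) {
	s := sm.pick(key)
	s.mu.RLock()
	v, ok := s.m[key]
	s.mu.RUnlock()
	return v, ok
}

// Del removes the key from its partition.
func (sm *ShardedMap) Del(key string) {
	s := sm.pick(key)
	s.mu.Lock()
	delete(s.m, key)
	s.mu.Unlock()
}

func main() {
	m := NewShardedMap(16)
	m.Set("a", 1)
	v, ok := m.Get("a")
	fmt.Println(v, ok) // 1 true
	m.Del("a")
	_, ok = m.Get("a")
	fmt.Println(ok) // false
}
```

With more partitions, two concurrent writers are less likely to need the same lock, at the price of a hash computation on every access.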

Benchmarking the Different Maps

1. Define the Map interface

type Map interface {
	Set(key interface{}, val interface{})
	Get(key interface{}) (interface{}, bool)
	Del(key interface{})
}

2. rw_map implementation

import "sync"

// RWLockMap is a built-in map protected by a single sync.RWMutex.
type RWLockMap struct {
	m    map[interface{}]interface{}
	lock sync.RWMutex
}

func (m *RWLockMap) Get(key interface{}) (interface{}, bool) {
	m.lock.RLock()
	v, ok := m.m[key]
	m.lock.RUnlock()
	return v, ok
}

func (m *RWLockMap) Set(key interface{}, value interface{}) {
	m.lock.Lock()
	m.m[key] = value
	m.lock.Unlock()
}

func (m *RWLockMap) Del(key interface{}) {
	m.lock.Lock()
	delete(m.m, key)
	m.lock.Unlock()
}

func CreateRWLockMap() *RWLockMap {
	m := make(map[interface{}]interface{}, 0)
	return &RWLockMap{m: m}
}

3. sync_map implementation

import "sync"

func CreateSyncMapBenchmarkAdapter() *SyncMapBenchmarkAdapter {
	return &SyncMapBenchmarkAdapter{}
}

// SyncMapBenchmarkAdapter adapts sync.Map to the Map interface.
type SyncMapBenchmarkAdapter struct {
	m sync.Map
}

func (m *SyncMapBenchmarkAdapter) Set(key interface{}, val interface{}) {
	m.m.Store(key, val)
}

func (m *SyncMapBenchmarkAdapter) Get(key interface{}) (interface{}, bool) {
	return m.m.Load(key)
}

func (m *SyncMapBenchmarkAdapter) Del(key interface{}) {
	m.m.Delete(key)
}

4. concurrent_map implementation

import "github.com/easierway/concurrent_map"

type ConcurrentMapBenchmarkAdapter struct {
	cm *concurrent_map.ConcurrentMap
}

func (m *ConcurrentMapBenchmarkAdapter) Set(key interface{}, value interface{}) {
	m.cm.Set(concurrent_map.StrKey(key.(string)), value)
}

func (m *ConcurrentMapBenchmarkAdapter) Get(key interface{}) (interface{}, bool) {
	return m.cm.Get(concurrent_map.StrKey(key.(string)))
}

func (m *ConcurrentMapBenchmarkAdapter) Del(key interface{}) {
	m.cm.Del(concurrent_map.StrKey(key.(string)))
}

func CreateConcurrentMapBenchmarkAdapter(numOfPartitions int) *ConcurrentMapBenchmarkAdapter {
	conMap := concurrent_map.CreateConcurrentMap(numOfPartitions)
	return &ConcurrentMapBenchmarkAdapter{conMap}
}

5. Benchmark

import (
	"strconv"
	"sync"
	"testing"
)

const (
	NumOfReader = 100
	NumOfWriter = 100
)

// benchmarkMap starts NumOfWriter writer goroutines and NumOfReader reader
// goroutines against hm in every iteration and waits for all of them.
func benchmarkMap(b *testing.B, hm Map) {
	for i := 0; i < b.N; i++ {
		var wg sync.WaitGroup
		for i := 0; i < NumOfWriter; i++ {
			wg.Add(1)
			go func() {
				for i := 0; i < 100; i++ {
					hm.Set(strconv.Itoa(i), i*i)
					hm.Set(strconv.Itoa(i), i*i)
					hm.Del(strconv.Itoa(i))
				}
				wg.Done()
			}()
		}
		for i := 0; i < NumOfReader; i++ {
			wg.Add(1)
			go func() {
				for i := 0; i < 100; i++ {
					hm.Get(strconv.Itoa(i))
				}
				wg.Done()
			}()
		}
		wg.Wait()
	}
}

func BenchmarkSyncmap(b *testing.B) {
	b.Run("map with RWLock", func(b *testing.B) {
		hm := CreateRWLockMap()
		benchmarkMap(b, hm)
	})
	b.Run("sync.map", func(b *testing.B) {
		hm := CreateSyncMapBenchmarkAdapter()
		benchmarkMap(b, hm)
	})
	b.Run("concurrent map", func(b *testing.B) {
		superman := CreateConcurrentMapBenchmarkAdapter(199)
		benchmarkMap(b, superman)
	})
}

Analysis of results:

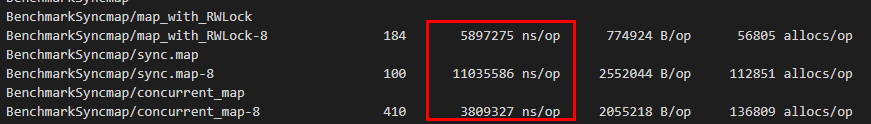

1. With NumOfReader=100 and NumOfWriter=100

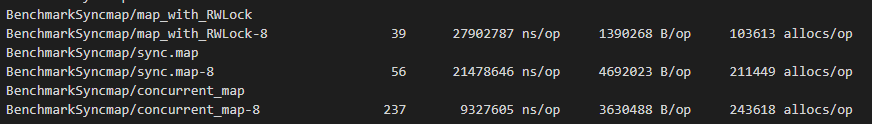

2. With NumOfReader=100 and NumOfWriter=200

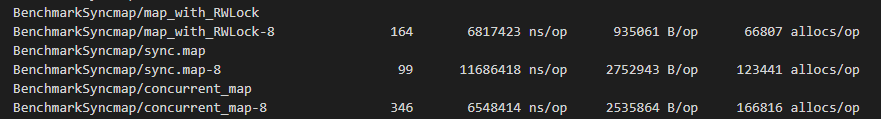

3. With NumOfReader=200 and NumOfWriter=100

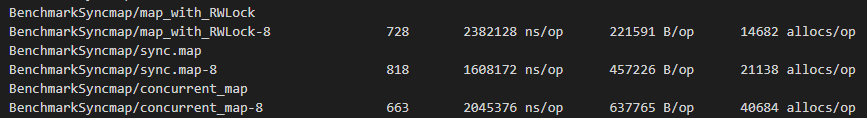

4. With NumOfReader=100 and NumOfWriter=10

The benchmark results suggest using concurrent_map when writes outnumber reads, and sync.Map when reads outnumber writes.

Summary

- Reduce the scope covered by each lock

- Reduce the probability of lock contention

  - sync.Map

  - ConcurrentMap

- Avoid using locks

  - LMAX Disruptor: https://martinfowler.com/articles/lmax.html
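One common way to keep locks out of the read path is copy-on-write: readers load an immutable snapshot atomically, and writers build a new snapshot and publish it. The sketch below uses sync/atomic's Value for that purpose. It is only an illustration of the idea (it is not the Disruptor pattern), the type name `COWCache` is hypothetical, and it assumes writes are rare, because every write copies the whole map.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// COWCache is a hypothetical copy-on-write cache: readers never lock.
type COWCache struct {
	mu   sync.Mutex   // serializes writers only
	data atomic.Value // always holds a map[string]string
}

func NewCOWCache() *COWCache {
	c := &COWCache{}
	c.data.Store(map[string]string{})
	return c
}

// Get reads from the current snapshot without taking any lock.
func (c *COWCache) Get(key string) (string, bool) {
	m := c.data.Load().(map[string]string)
	v, ok := m[key]
	return v, ok
}

// Set copies the current snapshot, updates the copy and publishes it.
// Published snapshots are never modified afterwards.
func (c *COWCache) Set(key, val string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	old := c.data.Load().(map[string]string)
	next := make(map[string]string, len(old)+1)
	for k, v := range old {
		next[k] = v
	}
	next[key] = val
	c.data.Store(next)
}

func main() {
	c := NewCOWCache()
	c.Set("a", "aa")

	var wg sync.WaitGroup
	for i := 0; i < 4; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < 1000; j++ {
				c.Get("a") // lock-free read
			}
		}()
	}
	c.Set("b", "bb") // a concurrent write publishes a new snapshot
	wg.Wait()

	v, ok := c.Get("b")
	fmt.Println(v, ok) // bb true
}
```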

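Writing GC-Friendly Code

Pass complex objects by reference where possible

- Passing arrays

- Passing structs

The following test program compares passing an array by value with passing a pointer to it: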

import "testing"

const NumOfElems = 1000

type Content struct {
	Detail [10000]int
}

// withValue receives a copy of the whole array.
func withValue(arr [NumOfElems]Content) int {
	// fmt.Println(&arr[2])
	return 0
}

// withReference receives only a pointer to the array.
func withReference(arr *[NumOfElems]Content) int {
	// b := *arr
	// fmt.Println(&arr[2])
	return 0
}

func TestFn(t *testing.T) {
	var arr [NumOfElems]Content
	// fmt.Println(&arr[2])
	withValue(arr)
	withReference(&arr)
}

func BenchmarkPassingArrayWithValue(b *testing.B) {
	var arr [NumOfElems]Content
	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		withValue(arr)
	}
	b.StopTimer()
}

// 76 14751696 ns/op 80003074 B/op 1 allocs/op

func BenchmarkPassingArrayWithRef(b *testing.B) {
	var arr [NumOfElems]Content
	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		withReference(&arr)
	}
	b.StopTimer()
}

// 1000000000 0.305 ns/op 0 B/op 0 allocs/op

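The performance gap is huge: passing by value copies the whole array of about 80 MB on every call (80003074 B/op), while passing a pointer copies nothing (0 B/op). In everyday code, avoid copying large values.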
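The difference is not only one of cost but also of semantics. The small sketch below (with deliberately tiny, made-up array sizes) shows that a function receiving an array by value works on its own copy, while a pointer or a slice lets the callee see and modify the caller's data. The function names are chosen for this illustration only.

```go
package main

import "fmt"

type Content struct {
	Detail [4]int
}

// byValue receives a full copy of the array; changes stay local.
func byValue(arr [3]Content) {
	arr[0].Detail[0] = 42
}

// byPointer receives only an address; changes are visible to the caller.
func byPointer(arr *[3]Content) {
	arr[0].Detail[0] = 42
}

// bySlice receives a small slice header that shares the backing array.
func bySlice(s []Content) {
	s[1].Detail[0] = 7
}

func main() {
	var arr [3]Content

	byValue(arr)
	fmt.Println(arr[0].Detail[0]) // 0: the callee modified a copy

	byPointer(&arr)
	fmt.Println(arr[0].Detail[0]) // 42

	bySlice(arr[:])
	fmt.Println(arr[1].Detail[0]) // 7
}
```

In practice a slice is usually the more idiomatic way to hand a large array to a function, since only the slice header is copied.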

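Enabling the GC Log

Simply set the environment variable GODEBUG=gctrace=1 before running the program, for example:

GODEBUG=gctrace=1 go test -bench=.

GODEBUG=gctrace=1 go run main.go

For the details of the log format, see: https://godoc.org/runtime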
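The gctrace output is printed by the runtime itself. As a complementary, in-process way to see how much GC work a piece of code causes, the sketch below counts completed GC cycles with runtime.ReadMemStats. The helpers `churn` and `gcCyclesDuring` are hypothetical names for this illustration; running the program with GODEBUG=gctrace=1 should additionally print one trace line per cycle.

```go
package main

import (
	"fmt"
	"runtime"
)

// sink keeps the most recent allocation reachable so the compiler
// cannot optimize the allocations away.
var sink [][]byte

// churn allocates rounds slices of size bytes each, producing garbage.
func churn(rounds, size int) {
	for i := 0; i < rounds; i++ {
		sink = append(sink[:0], make([]byte, size))
	}
}

// gcCyclesDuring reports how many GC cycles completed while fn ran,
// including one cycle forced at the end with runtime.GC.
func gcCyclesDuring(fn func()) uint32 {
	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)
	fn()
	runtime.GC() // force a final cycle so the result is at least 1
	runtime.ReadMemStats(&after)
	return after.NumGC - before.NumGC
}

func main() {
	cycles := gcCyclesDuring(func() {
		churn(200, 1<<20) // 200 allocations of 1 MiB each
	})
	fmt.Println("GC cycles:", cycles)
}
```

The exact number of cycles depends on the Go version and on the GOGC setting, so no fixed output is given here.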

go tool trace

Emitting trace data from an ordinary program:

import (
	"os"
	"runtime/trace"
)

func main() {
	f, err := os.Create("trace.out")
	if err != nil {
		panic(err)
	}
	defer f.Close()

	err = trace.Start(f)
	if err != nil {
		panic(err)
	}
	defer trace.Stop()

	// Your program here
}

Emitting trace data from tests: go test -trace trace.out

Visualizing the trace data: go tool trace trace.out

Initialize to an Appropriate Size

Automatic growth has a cost, as the following benchmarks show:

import "testing"

const numOfElems = 100000

const times = 1000

// BenchmarkAutoGrow starts from an empty slice and lets append grow it.
func BenchmarkAutoGrow(b *testing.B) {
	for i := 0; i < b.N; i++ {
		s := []int{}
		for j := 0; j < numOfElems; j++ {
			s = append(s, j)
		}
	}
}

// 2022 590903 ns/op 4654344 B/op 30 allocs/op

// BenchmarkProperInit allocates exactly the capacity that is needed.
func BenchmarkProperInit(b *testing.B) {
	for i := 0; i < b.N; i++ {
		s := make([]int, 0, numOfElems)
		for j := 0; j < numOfElems; j++ {
			s = append(s, j)
		}
	}
}

// 7077 163259 ns/op 802820 B/op 1 allocs/op

// BenchmarkOverSizeInit allocates eight times the capacity that is needed.
func BenchmarkOverSizeInit(b *testing.B) {
	for i := 0; i < b.N; i++ {
		s := make([]int, 0, numOfElems*8)
		for j := 0; j < numOfElems; j++ {
			s = append(s, j)
		}
	}
}

// 1582 704184 ns/op 6406155 B/op 1 allocs/op

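The results show that starting too small incurs the cost of repeated automatic growth (30 allocations instead of 1), while starting far too large also hurts performance. When the final size of a slice is known in advance, initializing it with that capacity is the best choice.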
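To make the growth cost visible without a benchmark, the following sketch counts how often append has to move to a larger backing array, detected as a change in cap. The function name `countGrowths` is made up for this illustration.

```go
package main

import "fmt"

// countGrowths appends n ints to a slice created with the given initial
// capacity and returns how many times append had to allocate a larger
// backing array (observed as a change in cap).
func countGrowths(n, initialCap int) int {
	s := make([]int, 0, initialCap)
	growths := 0
	for j := 0; j < n; j++ {
		before := cap(s)
		s = append(s, j)
		if cap(s) != before {
			growths++
		}
	}
	return growths
}

func main() {
	// Starting empty: several reallocations; the exact count depends on
	// the growth policy of the Go version in use.
	fmt.Println(countGrowths(100000, 0))

	// Starting with the right capacity: no reallocation at all.
	fmt.Println(countGrowths(100000, 100000)) // 0
}
```

Each growth step allocates a new array and copies the existing elements, which is where the extra time and memory in the auto-grow benchmark come from.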

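Efficient String Concatenation

String concatenation is very common in everyday development. Below we compare the performance of several approaches: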

import (
	"bytes"
	"fmt"
	"strconv"
	"strings"
	"testing"
)

const numbers = 100

func BenchmarkSprintf(b *testing.B) {
	b.ResetTimer()
	for idx := 0; idx < b.N; idx++ {
		var s string
		for i := 0; i < numbers; i++ {
			s = fmt.Sprintf("%v%v", s, i)
		}
	}
	b.StopTimer()
}

// 64848 23398 ns/op 11363 B/op 198 allocs/op

func BenchmarkStringBuilder(b *testing.B) {
	b.ResetTimer()
	for idx := 0; idx < b.N; idx++ {
		var builder strings.Builder
		for i := 0; i < numbers; i++ {
			builder.WriteString(strconv.Itoa(i))
		}
		_ = builder.String()
	}
	b.StopTimer()
}

// 668382 1651 ns/op 504 B/op 6 allocs/op

func BenchmarkBytesBuf(b *testing.B) {
	b.ResetTimer()
	for idx := 0; idx < b.N; idx++ {
		var buf bytes.Buffer
		for i := 0; i < numbers; i++ {
			buf.WriteString(strconv.Itoa(i))
		}
		_ = buf.String()
	}
	b.StopTimer()
}

// 812496 1824 ns/op 688 B/op 4 allocs/op

func BenchmarkStringAdd(b *testing.B) {
	b.ResetTimer()
	for idx := 0; idx < b.N; idx++ {
		var s string
		for i := 0; i < numbers; i++ {
			s += strconv.Itoa(i)
		}
	}
	b.StopTimer()
}

// 171823 7720 ns/op 9776 B/op 99 allocs/op

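strings.Builder performs best (1651 ns/op), with bytes.Buffer close behind (1824 ns/op); string += (7720 ns/op) and fmt.Sprintf (23398 ns/op) are considerably slower. For everyday development, strings.Builder is the recommended choice.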
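When the approximate size of the result is known, strings.Builder can also be pre-sized with Grow, which combines this advice with the earlier point about initializing to an appropriate size. The sketch below is illustrative: the function name `joinNumbers` is made up, and the estimate of two bytes per number is an assumption that is sufficient only for n up to 100.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// joinNumbers concatenates the decimal forms of 0..n-1 using strings.Builder.
func joinNumbers(n int) string {
	var builder strings.Builder
	if n > 0 {
		// Grow is only a capacity hint. Two bytes per number is a rough
		// estimate that covers n up to 100; beyond that the builder
		// simply grows on demand.
		builder.Grow(n * 2)
	}
	for i := 0; i < n; i++ {
		builder.WriteString(strconv.Itoa(i))
	}
	return builder.String()
}

func main() {
	fmt.Println(joinNumbers(5))        // 01234
	fmt.Println(len(joinNumbers(100))) // 190
}
```

A correct size hint lets the builder allocate its buffer once instead of growing it several times while the string is being assembled.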